LeNet-5#

LeNet-5 is an early and very influential type of convolutional neural network (CNN) developed by Yann LeCun and his colleagues in 1998, designed mainly to recognize handwritten digits like those in the MNIST dataset. What makes LeNet-5 special is how it combines several clever ideas that allow it to efficiently and accurately understand images despite their complexity—ideas that were crucial stepping stones for today’s deep learning revolution.

The input to LeNet-5 is a small grayscale image, sized 32 by 32 pixels. This size is chosen to comfortably cover handwritten digits centered in slightly smaller fields. Each pixel in the image is normalized between 0 and 1, which helps the network learn faster and better.

LeNet-5 is made up of seven layers (not counting the input layer). These layers include:

Convolutional layers: These layers use small filters (for example, 5x5 pixels) that slide over the image looking for simple patterns like edges or corners. The first convolutional layer creates 6 “feature maps,” which are like six different filtered views of the image highlighting different features. The next convolutional layer creates 16 feature maps from the previous outputs, discovering even more complex patterns.

Pooling layers: After each convolutional layer, an average pooling layer shrinks the size of these feature maps by taking average values from small patches (2x2 pixels). This process helps reduce the complexity of the data and makes the network less sensitive to small shifts or distortions in the input.

Fully connected layers: Toward the end, all the spatially flattened features are fed through fully connected layers. These layers operate like a traditional neural network, combining all the spatial features to figure out what digit the image represents. The last layer has 10 output neurons, each corresponding to a digit from 0 to 9, and the network predicts which one is most likely present.

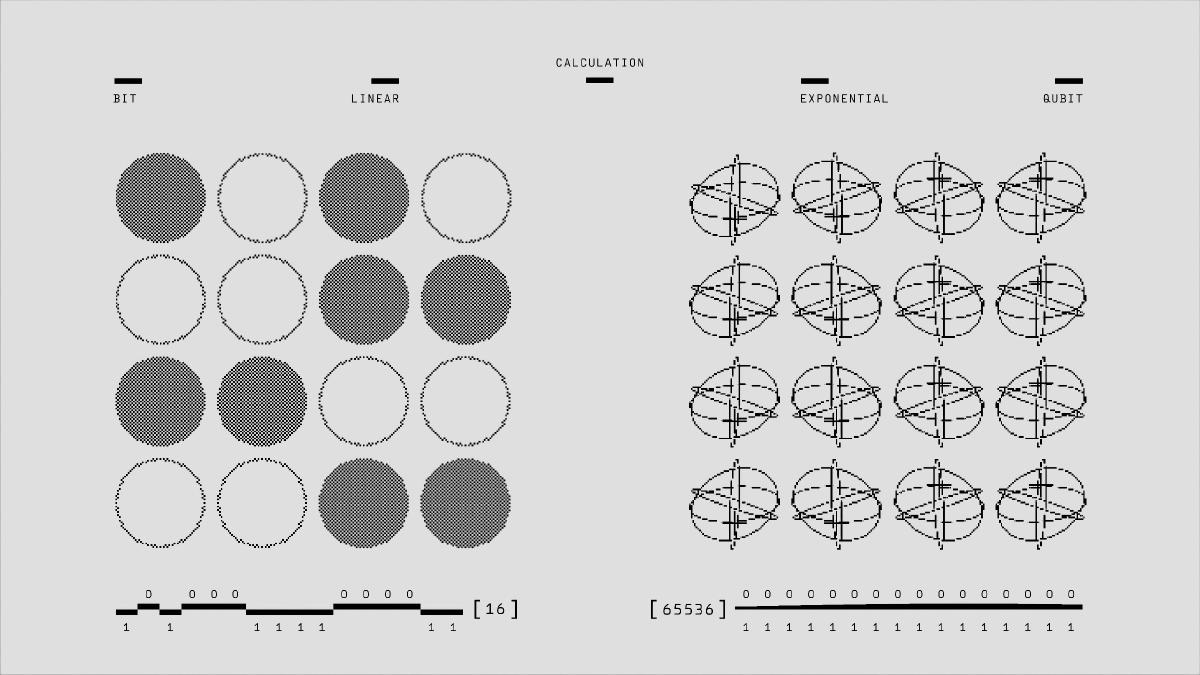

One of the major breakthroughs of LeNet-5 was how it addressed two big problems from earlier neural networks. First, it used the convolution and pooling operations to achieve spatial invariance, meaning the network could recognize parts of an image no matter where they appeared. Second, it greatly reduced the number of parameters that needed to be trained by sharing weights in convolution, making the network feasible to train with the computing power available at the time.

LeNet-5’s success demonstrated that neural networks could learn useful features directly from raw image pixels, instead of relying on handcrafted features, and could be trained end-to-end using backpropagation. This made it practical for real-world applications, like automatic check reading by banks.

More than a decade later, the ideas behind LeNet-5 set a foundation for AlexNet (2012), which scaled up the design dramatically. AlexNet used deeper networks with many more convolutional and fully connected layers, introduced the ReLU activation function (which allowed faster and more effective training), and used larger datasets like ImageNet along with powerful GPUs. This combination led to a huge leap in image recognition performance and sparked the modern era of deep learning.

Introducing Yann LeCun: Pioneer of Deep Learning and Modern AI#

Yann LeCun is a leading figure in the world of artificial intelligence, best known for founding many of the foundational ideas that power today’s deep learning systems. Originally from France, LeCun’s work has focused on teaching machines to see and understand images much like humans do, making huge strides in computer vision and machine learning.

His most famous contribution is the invention and development of convolutional neural networks (CNNs), a special type of artificial neural network designed to process visual data efficiently by automatically learning patterns such as edges, textures, and object parts. This work led to the creation of LeNet-5, one of the earliest practical CNNs, which was used to automatically read handwritten digits, receiving wide adoption in applications like automated check reading by banks in the 1990s.

However, LeCun’s influence reaches far beyond LeNet-5. Early in his career during the 1980s, he was pivotal in adapting the backpropagation algorithm—a method for training neural networks—making it practical for real-world problems. He also co-created the MNIST dataset, a widely used benchmark of handwritten digits that remains crucial for evaluating machine learning models today.

In addition, LeCun contributed to the development of DjVu, a document compression technology important for digital libraries and document storage. His work continuously bridges theoretical research and practical applications, advancing fields like unsupervised learning, where machines learn patterns from unlabeled data, and reinforcement learning, which teaches machines to learn from trial and error.

LeCun’s visionary insights earned him several prestigious awards, including the 2018 ACM A.M. Turing Award—known as the “Nobel Prize of Computing”—which he shared with Geoffrey Hinton and Yoshua Bengio for their collective breakthroughs in deep learning. In 2025, he was honored with the Queen Elizabeth Prize for Engineering for his fundamental contributions to modern AI.

As Chief AI Scientist at Meta (formerly Facebook) and professor at New York University, LeCun continues to push AI forward. He critiques current limitations of existing AI models, advocating for new approaches based on “world models” and self-supervised learning, aspiring to create machines that understand and reason about the physical world more like humans do.