Why Do We Reduce Problems?#

Imagine you’re faced with a new, tough problem in computer science. You’re not sure if you can solve it quickly, or even at all. Instead of attacking it head-on, a classic strategy is to relate it to other, already-studied problems. If you can show that solving your hard problem would allow you to solve another well-known hard problem, you’ve learned something powerful about its difficulty.

This is the idea behind reductions: turn every instance of one problem into an instance of another problem so that solving the new instance solves the original. It’s like translating languages: if you can translate every word in English directly into French, you know the languages are closely related. In algorithms, reductions let us rigorously compare the hardness of different computational problems.

P, NP, and Problem Types: The Big Picture#

Let’s organize the world of computational problems:

P (Polynomial Time): Problems for which we can find a solution “quickly” (that is, in time $O(n^k)$ for some $k$), e.g., sorting a list, finding the shortest path in a graph.

NP (Nondeterministic Polynomial Time): Problems where, if you’re given a solution, you can check it quickly—but you might not know how to find it quickly.(Example: Given a Sudoku solution, it’s easy to check if it’s correct, but solving a new puzzle from scratch might be hard.)

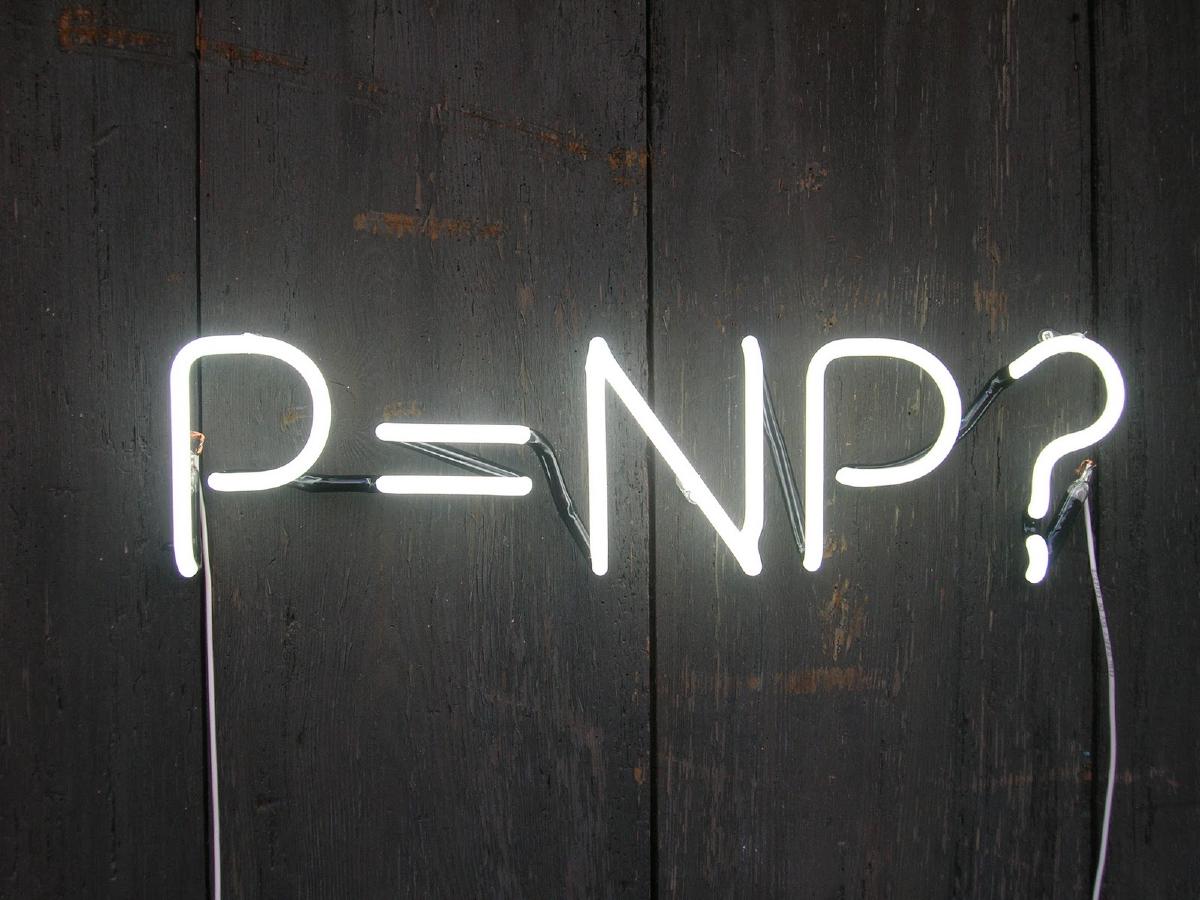

NP-Complete: The “hardest” problems in NP. If any of these can be solved quickly, then every NP (and thus P) problem can be solved quickly ($\mathbf{P} = \mathbf{NP}$, which is the biggest open question in theoretical CS).

NP-Hard: Problems at least as hard as NP-complete, but they don’t have to be in NP (you might not even be able to check their solutions efficiently).

Why does all this classification matter? It gives us a scientific way to reason about which problems are likely to have efficient algorithms and which ones aren’t—an essential guide for algorithm designers and computer scientists everywhere.

The 3-SAT Problem: What You Need to Know#

3-SAT is a famous logic puzzle at the heart of NP-completeness. Here’s what you actually need to know:

Problem Statement:

Given a formula, written as ANDs of ORs, with each OR containing exactly three variables or their negations (called “literals”), can you pick TRUE/FALSE values for all variables to make the entire formula TRUE?Example:

$$ (x_1 \vee \neg x_2 \vee x_3)\; \wedge\; (\neg x_1 \vee x_2 \vee x_3) $$The question: does there exist an assignment to $x_1, x_2, x_3$ such that both clauses evaluate to TRUE?

Why do we always start with 3-SAT? Because it’s like the “universal skeleton key” for NP-completeness—if you can show your problem is at least as hard as 3-SAT (using a reduction), you’ve proven NP-hardness.

The Reduction: From 3-SAT to 3-Coloring (Core of the Proof)#

Problem Definitions#

- 3-SAT: As above—find a satisfying assignment for a given set of clauses, each with exactly three literals.

- 3-Coloring: Given a graph, can you color each vertex with one of three colors so that no two adjacent vertices share a color?

Why Reduce 3-SAT to 3-Coloring?#

If we can show that any 3-SAT problem can be transformed (i.e., reduced) into a 3-Coloring problem in polynomial time, we prove that 3-Coloring is at least as hard as 3-SAT, and thus is also NP-complete.

Gadget Construction (How the Reduction Works)#

Palette Triangle:

We start with three special vertices—call them $T$ (TRUE), $F$ (FALSE), $B$ (BASE)—form a triangle (they must have distinct colors).

Variable Gadget:

For every variable $x$:

- Make two vertices: $v_x$ (for $x$ true), $v_{¬x}$ (for $x$ false).

- Connect $v_x$, $v_{¬x}$, $B$ in a triangle. This forces one to be TRUE, one to be FALSE.

Clause Gadget:

For every clause $(a \vee b \vee c)$:

- Add a new vertex $C$.

- Connect $C$ to $F$, $v_a$, $v_b$, $v_c$.

ASCII sketch of a clause gadget:

v_a v_b v_c

\ | /

\ | /

(C)

|

F

- $C$ can’t be colored $F$ (since it’s adjacent to $F$).

- If all $v_a, v_b, v_c$ are FALSE, no available color for $C$, so the only way to color the graph is for every clause to have at least one TRUE literal.

Full Logical Flow (Mathematical Summary)#

- If the 3-SAT formula is satisfiable, the coloring exists because you can set the gadgets according to the satisfying assignment.

- If the graph is 3-colorable, reading the TRUE/FALSE values from the variable gadgets gives a satisfying assignment.

Thus, a solution to the 3-Coloring graph corresponds exactly to a solution to the 3-SAT formula.

Why Go to All This Trouble? (Reflection)#

By performing this reduction, we achieve:

- Classification: We show 3-Coloring is “just as hard as” 3-SAT, and thus NP-complete.

- Tools: You can now prove other problems are hard by reducing 3-SAT or 3-Coloring to them—they work as modular building blocks.

- Insight: Seemingly different worlds (logic, graphs) are deeply related: logical formulas and graph colorings are two faces of the same computational coin.

For practical algorithm design, this means:

- Unless $\mathbf{P} = \mathbf{NP}$, don’t expect a fast, always-correct algorithm for general 3-Coloring, but look for special cases, heuristics, or approximations.

Understanding and designing reductions is at the heart of theoretical computer science—and gives us the tools to recognize, reason about, and explore the world of computational difficulty.

References#

- Garey, M.R., & Johnson, D.S. Computers and Intractability: A Guide to the Theory of NP-Completeness. W. H. Freeman, 1979.

- Sipser, M. Introduction to the Theory of Computation.

- Wikipedia: Boolean Satisfiability Problem

- UIUC Course Slide: 3-Coloring and SAT

- GeeksforGeeks: 3-Coloring is NP Complete